AI Bias Detection and Mitigation Techniques: Ensuring Fairness in Machine Learning

Artificial intelligence (AI) has transformed various industries, offering solutions that improve efficiency, decision-making, and personalization. However, the increasing reliance on AI has revealed a pressing issue: bias. AI systems, which learn from data, often inherit and even amplify biases present in their training datasets. This can lead to unfair outcomes, discrimination, and a lack of trust in AI systems. Addressing bias is crucial for the ethical and equitable deployment of AI technologies.

This article explores how AI bias occurs, its potential consequences, and the techniques used to detect and mitigate bias in AI systems. By addressing these challenges, we can ensure that AI technologies serve all individuals fairly and equitably.

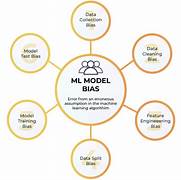

Understanding AI Bias

AI bias occurs when an AI system produces unfair or prejudiced outcomes due to biases present in the data, algorithms, or design. Bias in AI can manifest in various forms, including:

- Data Bias: Training datasets may reflect historical prejudices or imbalances. For example, facial recognition systems trained predominantly on lighter-skinned faces often perform poorly on darker-skinned individuals.

- Algorithmic Bias: The design or structure of an algorithm may inadvertently favor certain outcomes. This can happen when optimization criteria prioritize accuracy for the majority group while neglecting minority groups.

- User Bias: Human biases from developers or end-users can influence how AI systems are designed, trained, or applied.

Bias can lead to real-world consequences, such as discriminatory hiring practices, unequal access to financial services, or racial profiling in law enforcement. Detecting and mitigating these biases is critical to ensuring that AI technologies promote fairness and inclusivity.

Techniques for Detecting AI Bias

Detecting bias in AI systems is the first step toward addressing the issue. Below are commonly used techniques for identifying bias:

1. Data Auditing

- What it is: A thorough examination of the training dataset to identify imbalances or representation gaps.

- How it works: Analysts examine data distributions to ensure that all demographic groups are fairly represented. For example, a dataset for a hiring algorithm should include diverse examples across gender, race, age, and other factors.

- Tools: Tools like Fairlearn and AI Fairness 360 offer features to audit datasets for bias.
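A data audit of this kind can be sketched in a few lines of plain Python. The records, field names (`gender`, `hired`), and the `audit` helper below are hypothetical and exist only for illustration; a real audit would run over the actual training set and cover more attributes.

```python
from collections import Counter

# Hypothetical applicant records; the field names are illustrative only.
records = [
    {"gender": "female", "hired": 1},
    {"gender": "male", "hired": 1},
    {"gender": "male", "hired": 0},
    {"gender": "male", "hired": 1},
    {"gender": "female", "hired": 0},
    {"gender": "male", "hired": 1},
    {"gender": "male", "hired": 0},
    {"gender": "male", "hired": 1},
]

def audit(records, group_key, label_key):
    """Return each group's share of the data and its positive-label rate."""
    counts = Counter(r[group_key] for r in records)
    positives = Counter(r[group_key] for r in records if r[label_key] == 1)
    total = len(records)
    return {
        group: {
            "share": counts[group] / total,
            "positive_rate": positives[group] / counts[group],
        }
        for group in counts
    }

report = audit(records, "gender", "hired")
for group, stats in sorted(report.items()):
    print(f"{group}: share={stats['share']:.2f}, "
          f"positive_rate={stats['positive_rate']:.2f}")
```

A large gap in `share` points to a representation problem, while a large gap in `positive_rate` suggests that the labels themselves may encode historical imbalance.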

2. Bias Metrics

- What it is: Using statistical measures to assess bias in AI models.

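- How it works: Metrics such as demographic parity, equalized odds, and disparate impact measure how the model’s predictions differ across groups. For example:

- Demographic parity: Checks whether positive outcomes (e.g., loan approvals) are predicted at the same rate for every group.

- Equalized odds: Checks whether the true positive rate and the false positive rate are the same for every group, so that no group bears a disproportionate share of the model's errors.

- Disparate impact: Compares the positive-outcome rate of one group to that of another as a ratio; a value well below 1 indicates that one group is selected far less often.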

- Tools: Tools like the open-source Themis and IBM's commercial Watson OpenScale help calculate these metrics.
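The metrics named above reduce to simple per-group rates. The following is a minimal sketch in plain Python, not the API of any tool mentioned in this article; the toy labels, predictions, group names, and the `group_metrics` helper are assumptions made for illustration.

```python
def rate(values):
    """Mean of a list of 0/1 values; 0.0 for an empty list."""
    return sum(values) / len(values) if values else 0.0

def group_metrics(y_true, y_pred, groups):
    """Per-group selection rate, true positive rate, and false positive rate."""
    out = {}
    for g in set(groups):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        preds = [y_pred[i] for i in idx]
        preds_on_pos = [y_pred[i] for i in idx if y_true[i] == 1]
        preds_on_neg = [y_pred[i] for i in idx if y_true[i] == 0]
        out[g] = {
            "selection_rate": rate(preds),
            "tpr": rate(preds_on_pos),
            "fpr": rate(preds_on_neg),
        }
    return out

# Toy data: true labels, model predictions, and a group label per example.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

m = group_metrics(y_true, y_pred, groups)
rates = [m[g]["selection_rate"] for g in m]

# Demographic parity: difference in selection rates (0 means parity).
dp_difference = max(rates) - min(rates)
# Disparate impact: ratio of lowest to highest selection rate (1 means parity).
disparate_impact = min(rates) / max(rates)
# Equalized odds: gaps in true positive and false positive rates.
tpr_gap = abs(m["a"]["tpr"] - m["b"]["tpr"])
fpr_gap = abs(m["a"]["fpr"] - m["b"]["fpr"])

print(dp_difference, disparate_impact, tpr_gap, fpr_gap)
```

A common rule of thumb, the "four-fifths rule", treats a disparate impact ratio below 0.8 as a signal worth investigating.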

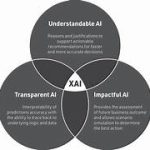

3. Explainability Techniques

- What it is: Methods to make AI decisions transparent and understandable.

- How it works: Techniques like SHAP (SHapley Additive exPlanations) or LIME (Local Interpretable Model-agnostic Explanations) provide insights into how an AI model reaches its conclusions. This can help identify whether certain features (e.g., gender or ethnicity) disproportionately influence outcomes.
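To make the idea behind SHAP concrete, the sketch below computes exact Shapley values for a tiny hand-written model by enumerating every feature ordering. It does not use the SHAP library, which relies on faster approximations. The `score` model, its feature names, and the baseline values are hypothetical.

```python
from itertools import permutations

def shapley_values(model, instance, baseline):
    """Exact Shapley attributions for one instance.

    Features not yet "switched on" keep their baseline value. Enumerating
    all orderings costs O(n!), so this only suits very small feature sets.
    """
    names = list(instance)
    contrib = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)
        prev = model(current)
        for name in order:
            current[name] = instance[name]
            new = model(current)
            contrib[name] += new - prev  # marginal contribution of `name`
            prev = new
    return {name: total / len(orderings) for name, total in contrib.items()}

# Hypothetical scoring model that (undesirably) uses a gender indicator.
def score(x):
    return 0.5 * x["experience"] + 2.0 * x["gender_male"]

instance = {"experience": 4, "gender_male": 1}
baseline = {"experience": 2, "gender_male": 0}

phi = shapley_values(score, instance, baseline)
print(phi)
```

The attributions sum to the difference between the instance's score and the baseline score. A large attribution on a sensitive feature, as with `gender_male` here, is the kind of signal an explainability review looks for.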

4. Bias Testing Frameworks

- What it is: Frameworks designed to test AI systems for biased behavior.

- How it works: Developers simulate scenarios with varied inputs to observe how the model performs. For example, a hiring algorithm can be tested with resumes that are identical except for gender to check for disparities in recommendations.

- Tools: Frameworks like Google’s What-If Tool allow for scenario testing to uncover potential biases.
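The identical-resume test described above can be sketched as a small counterfactual check. This is plain Python rather than the What-If Tool; the `flip_test` helper, both toy models, and the resume fields are hypothetical.

```python
def flip_test(model, applicants, attribute, values, tolerance=0.0):
    """Return applicants whose score changes when only `attribute` changes."""
    flagged = []
    for applicant in applicants:
        scores = {}
        for value in values:
            # Copy the record and change nothing except the tested attribute.
            variant = dict(applicant, **{attribute: value})
            scores[value] = model(variant)
        if max(scores.values()) - min(scores.values()) > tolerance:
            flagged.append((applicant["id"], scores))
    return flagged

# Hypothetical model that is deliberately biased, for demonstration.
def biased_model(resume):
    score = 10 * resume["years_experience"]
    if resume["gender"] == "female":
        score -= 5
    return score

# Hypothetical model that ignores gender.
def neutral_model(resume):
    return 10 * resume["years_experience"]

applicants = [
    {"id": 1, "years_experience": 3, "gender": "female"},
    {"id": 2, "years_experience": 7, "gender": "male"},
]

flagged_biased = flip_test(biased_model, applicants, "gender", ["female", "male"])
flagged_neutral = flip_test(neutral_model, applicants, "gender", ["female", "male"])
print(flagged_biased)
print(flagged_neutral)
```

Passing this check only shows that the model does not use the attribute directly; it does not rule out bias that enters through correlated features.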

Techniques for Mitigating AI Bias

Once bias is detected, mitigation techniques can be applied to reduce its impact. These techniques span the data, algorithm, and post-deployment stages.

1. Pre-Processing Techniques

- What it is: Adjusting the training data before feeding it into the AI model.

- Methods:

- Data Augmentation: Adding synthetic data to underrepresented groups to balance the dataset.

- Re-sampling: Over-sampling minority groups or under-sampling majority groups to ensure equitable representation.

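- Data Anonymization: Removing sensitive features (e.g., race or gender) so the model cannot use them directly. On its own this is often insufficient, because correlated proxy features (e.g., postal code) can still let the model reconstruct the removed attribute.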

- Example: In a credit scoring model, ensuring equal representation of different income groups can prevent discriminatory outcomes.
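As one illustration of re-sampling, the sketch below randomly duplicates rows from smaller groups until each group matches the largest one. The `oversample` helper, the `income_band` field, and the toy records are assumptions made for this example.

```python
import random
from collections import Counter, defaultdict

def oversample(records, group_key, seed=0):
    """Randomly duplicate rows until every group matches the largest group."""
    rng = random.Random(seed)  # fixed seed keeps the example reproducible
    by_group = defaultdict(list)
    for r in records:
        by_group[r[group_key]].append(r)
    target = max(len(rows) for rows in by_group.values())
    balanced = []
    for rows in by_group.values():
        balanced.extend(rows)
        # Draw the missing rows with replacement from the same group.
        balanced.extend(rng.choices(rows, k=target - len(rows)))
    return balanced

# Hypothetical credit-scoring records with an under-represented group.
records = (
    [{"income_band": "high", "repaid": 1} for _ in range(6)]
    + [{"income_band": "low", "repaid": 1}, {"income_band": "low", "repaid": 0}]
)

balanced = oversample(records, "income_band")
counts = Counter(r["income_band"] for r in balanced)
print(counts)
```

Re-sampling should normally be applied to the training split only, so that duplicated rows do not leak into validation or test data.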

2. In-Processing Techniques

- What it is: Modifying the learning process of the AI model to reduce bias.

- Methods:

- Fairness Constraints: Adding constraints to the optimization function to ensure fairness metrics are met during training.

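- Adversarial Debiasing: Training the main model together with an adversary. The main model tries to predict outcomes, while the adversary tries to infer the sensitive attribute from the main model's predictions; the main model is penalized whenever the adversary succeeds, which pushes it toward predictions that carry less information about that attribute.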

- Example: A hiring algorithm can be trained with fairness constraints to ensure equal selection rates for all genders.
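One simple way to approximate a fairness constraint is to add a penalty term to the training loss, which is a soft relaxation rather than a hard constraint. The sketch below shows only the objective for a logistic model with exactly two groups; an optimizer would then minimize it. The `penalized_loss` helper, the weight `lam`, and the toy data are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def penalized_loss(weights, X, y, groups, lam):
    """Log loss plus lam * (gap in mean predicted score between two groups)^2.

    Returns (loss, gap). Assumes `groups` contains exactly two distinct values.
    """
    probs = [sigmoid(sum(w * xi for w, xi in zip(weights, row))) for row in X]
    eps = 1e-12  # avoids log(0)
    log_loss = -sum(
        yi * math.log(p + eps) + (1 - yi) * math.log(1 - p + eps)
        for yi, p in zip(y, probs)
    ) / len(y)
    first, second = sorted(set(groups))
    mean_first = sum(p for p, g in zip(probs, groups) if g == first) / groups.count(first)
    mean_second = sum(p for p, g in zip(probs, groups) if g == second) / groups.count(second)
    gap = mean_first - mean_second
    return log_loss + lam * gap ** 2, gap

# Columns: intercept, a skill score, and a gender indicator (1 = male).
X = [[1, 0.2, 1], [1, 0.8, 1], [1, 0.4, 0], [1, 0.9, 0]]
y = [1, 1, 0, 1]
groups = ["m", "m", "f", "f"]

# Weights that lean heavily on the gender indicator.
w_biased = [0.0, 0.0, 2.0]
plain, gap = penalized_loss(w_biased, X, y, groups, lam=0.0)
penalized, _ = penalized_loss(w_biased, X, y, groups, lam=5.0)
print(plain, penalized, gap)
```

Because the penalty grows with the squared gap in mean predicted scores, weights that rely on the gender indicator become more expensive as `lam` increases, which nudges training toward equal selection rates.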

3. Post-Processing Techniques

- What it is: Adjusting model outputs after training to achieve fairness.

- Methods:

- Reweighting Outputs: Adjusting the importance of predictions to ensure equitable outcomes across groups.

- Threshold Adjustments: Setting different decision thresholds for different groups to balance performance.

- Example: In a loan approval system, adjusting the acceptance threshold for underrepresented groups to counteract historical discrimination.
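Threshold adjustment can be sketched as choosing, per group, the score cut-off that yields a common selection rate. The scores, group names, target rate, and helper functions below are hypothetical, and equalizing selection rates is only one of several possible fairness targets.

```python
def selection_rate(scores, threshold):
    """Fraction of scores at or above the threshold."""
    return sum(s >= threshold for s in scores) / len(scores)

def threshold_for_rate(scores, target_rate):
    """Cut-off equal to the k-th highest score, where k ~ target_rate * n.

    With tied scores, slightly more than k items may be selected.
    """
    ranked = sorted(scores, reverse=True)
    k = max(1, round(target_rate * len(ranked)))
    return ranked[k - 1]

# Hypothetical model scores for loan applicants in two groups.
scores_by_group = {
    "a": [0.9, 0.8, 0.7, 0.4, 0.3],
    "b": [0.6, 0.5, 0.45, 0.2, 0.1],
}

# A single global threshold produces unequal approval rates.
global_rates = {g: selection_rate(s, 0.5) for g, s in scores_by_group.items()}

# Group-specific thresholds chosen to approve 40% of each group.
thresholds = {g: threshold_for_rate(s, 0.4) for g, s in scores_by_group.items()}
adjusted_rates = {
    g: selection_rate(s, thresholds[g]) for g, s in scores_by_group.items()
}

print(global_rates, thresholds, adjusted_rates)
```

The model itself is unchanged; only the decision rule applied to its scores differs by group.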

4. Human-in-the-Loop Systems

- What it is: Involving human oversight in the decision-making process.

- How it works: Human reviewers evaluate model decisions, especially in high-stakes scenarios like hiring or law enforcement, to ensure fairness and mitigate unintended biases.

- Example: A hiring AI shortlists candidates, but final decisions are reviewed by a diverse hiring committee.
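One possible way to wire human review into a pipeline is to route borderline scores to a review queue and handle only clear-cut cases automatically. The `route` helper and its `low` and `high` bounds are assumptions for this sketch; in high-stakes settings an organization may prefer to send every decision to review.

```python
def route(decisions, low=0.35, high=0.65):
    """Split decisions into auto-handled and human-review queues by score.

    Scores inside [low, high] are treated as uncertain and sent to review.
    """
    auto, review = [], []
    for d in decisions:
        if low <= d["score"] <= high:
            review.append(d)
        else:
            auto.append(d)
    return auto, review

# Hypothetical candidate scores produced by a shortlisting model.
decisions = [
    {"id": 1, "score": 0.92},
    {"id": 2, "score": 0.50},
    {"id": 3, "score": 0.10},
    {"id": 4, "score": 0.60},
]

auto, review = route(decisions)
print([d["id"] for d in auto], [d["id"] for d in review])
```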

5. Algorithmic Approaches

- What it is: Designing algorithms specifically to minimize bias.

- Examples:

- Fair Machine Learning Algorithms: Algorithms like Fair-SMOTE generate synthetic examples for underrepresented groups.

- Reinforcement Learning for Fairness: Using reinforcement learning to optimize fairness metrics during training.

Challenges in Bias Detection and Mitigation

Despite advancements in bias detection and mitigation techniques, several challenges remain:

- Subjectivity of Fairness: Fairness is context-dependent and varies across cultures, industries, and stakeholders. What is considered fair in one scenario may not be applicable in another.

- Trade-offs Between Fairness and Accuracy: Striving for fairness may reduce model accuracy or efficiency, especially when datasets are limited or unbalanced.

- Lack of Representative Data: Collecting diverse and representative data remains a challenge, particularly in regions or groups with limited digital access.

- Complexity of Bias: Bias can be subtle and multi-faceted, making it difficult to detect and address comprehensively.

The Path Forward: Building Fair AI Systems

To ensure that AI systems are fair and unbiased, a multi-pronged approach is required:

- Interdisciplinary Collaboration: Involving experts from fields like ethics, sociology, and law to guide the development of AI systems.

- Continuous Monitoring: Bias detection and mitigation should not be a one-time effort. Regular audits and updates are necessary to address new challenges as AI systems evolve.

- Transparency and Accountability: Organizations must adopt transparent practices, clearly documenting how their AI systems are designed, trained, and tested for fairness.

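- Regulatory Compliance: Adhering to legal frameworks like GDPR, which requires personal data to be processed fairly and transparently and restricts decisions based solely on automated processing when they have legal or similarly significant effects on individuals.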

- Public Awareness: Educating users about the potential biases in AI systems and empowering them to challenge unfair outcomes.

Conclusion

Bias in AI systems is a critical issue that can undermine the benefits of AI and perpetuate inequalities. By employing robust techniques for detecting and mitigating bias, we can build AI systems that are fair, inclusive, and trustworthy. The journey to bias-free AI is not without challenges, but with collaboration, transparency, and a commitment to fairness, we can ensure that AI technologies serve as a force for good, promoting equity and justice in society.