Developing Explainable and Transparent AI Models: Building Trust in Artificial Intelligence

Artificial intelligence (AI) has become a transformative force across industries, powering everything from healthcare diagnostics to financial services and autonomous vehicles. However, as AI systems grow more sophisticated, their decision-making processes often become opaque—a phenomenon known as the “black box” problem. This lack of transparency raises critical questions about trust, accountability, and ethical use, especially in high-stakes applications.

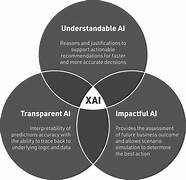

Explainable AI (XAI) and transparent AI models aim to address these concerns by making AI systems more understandable to users and stakeholders. This article explores the importance of explainability and transparency in AI, the challenges involved, and the methods used to achieve these goals.

Why Explainability and Transparency Matter in AI

1. Building Trust

Transparency fosters trust between AI systems and users. When individuals understand how an AI system arrives at its conclusions, they are more likely to accept and rely on its decisions. This is particularly crucial in sectors like healthcare, where trust is paramount.

2. Ensuring Accountability

Transparent AI systems make it easier to identify and address errors, biases, or unethical outcomes. This accountability is essential for maintaining ethical standards and ensuring compliance with regulations.

3. Facilitating Compliance

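Regulations such as the European Union's General Data Protection Regulation (GDPR) restrict decisions based solely on automated processing when they have legal or similarly significant effects, and they entitle individuals to meaningful information about the logic involved. Whether this amounts to a full “right to explanation” is still debated, but transparent AI models make it easier for organizations to meet these obligations.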

4. Empowering Users

Explainable AI enables users to challenge, question, or verify the outputs of AI systems. This empowerment is critical in scenarios where AI decisions have a significant impact, such as credit approvals or criminal sentencing.

Challenges in Developing Explainable and Transparent AI Models

Despite the clear benefits, achieving explainability and transparency in AI systems is not without its challenges:

1. Complexity of Modern AI

Advanced AI models, such as deep neural networks, are inherently complex, with millions or even billions of parameters. Simplifying these models without sacrificing performance is a significant challenge.

2. Trade-Offs Between Accuracy and Explainability

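The most accurate model for a task is often among the least interpretable, so simplifying a model to make it explainable may reduce its predictive power. The trade-off is not universal: on structured, tabular data, a well-built simple model can sometimes perform close to a complex one.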

3. Context-Dependent Explanations

What constitutes a “good explanation” varies depending on the audience. A technical explanation may be suitable for data scientists but not for end-users or policymakers.

4. Lack of Standardization

The field of explainable AI is still evolving, and there is no universal standard for measuring or ensuring explainability and transparency.

Techniques for Developing Explainable AI Models

Several techniques have been developed to make AI models more explainable and transparent. These techniques can be broadly categorized into pre-model, in-model, and post-model approaches.

1. Pre-Model Techniques

These methods focus on ensuring transparency before the AI model is built.

- Data Transparency: Clearly documenting the sources, structure, and preprocessing of data ensures that stakeholders understand what the model is learning from.

- Feature Selection: Using fewer, more interpretable features makes the model easier to explain. For example, in a credit scoring model, focusing on features like income and payment history rather than opaque metrics can improve transparency.

2. In-Model Techniques

These methods involve designing inherently interpretable models.

- Rule-Based Models: Algorithms like decision trees or rule-based systems provide clear, human-readable decision paths.

- Linear Models: Linear regression and logistic regression are transparent because they explicitly show how input features contribute to the output.

- Sparse Models: Sparse algorithms limit the number of features used, making the model easier to interpret.
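
To make the point about linear models concrete, here is a minimal sketch of how a logistic regression exposes each feature's contribution to a prediction. The feature names, weights, and bias are hypothetical values chosen for illustration, not taken from any real credit model.

```python
import math

# Hypothetical weights for an illustrative credit model (assumed, not from a real system).
WEIGHTS = {"income_k": 0.04, "late_payments": -0.8, "years_employed": 0.15}
BIAS = -2.0


def explain_linear(features):
    """Return (probability, per-feature contributions to the log-odds)."""
    # In a linear model, each feature's contribution is simply weight * value.
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    log_odds = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    return probability, contributions


applicant = {"income_k": 60, "late_payments": 2, "years_employed": 4}
probability, contributions = explain_linear(applicant)

# List features from most to least influential for this applicant.
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>15}: {value:+.2f}")
print(f"approval probability: {probability:.3f}")
```

Because the contributions add up to the log-odds (plus the bias), the explanation is the model itself rather than an approximation of it. A sparse model would follow the same pattern with most weights set to zero.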

3. Post-Model Techniques

Post-model techniques are applied after the AI system is built to make its decisions more understandable.

- SHAP (SHapley Additive exPlanations): Based on Shapley values from cooperative game theory, this method assigns each feature a value showing how much it contributes to a specific prediction relative to a baseline.

- LIME (Local Interpretable Model-Agnostic Explanations): LIME explains individual predictions by approximating the model with a simpler, interpretable model around the specific instance being analyzed.

- Saliency Maps: In computer vision, saliency maps highlight which parts of an image contributed most to the AI’s decision.

- Counterfactual Explanations: These explain what changes to the input would result in a different outcome. For example, in a loan application, the explanation might state, “If your annual income were $5,000 higher, the loan would be approved.”
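
Two of the ideas above, Shapley-value attribution and counterfactual explanations, can be sketched on a toy model. This is a minimal illustration under stated assumptions: the `score` function, its features, the baseline, and the approval threshold are all hypothetical. The Shapley values are computed exactly by enumerating feature orderings, which is only feasible for a handful of features; it is not how the SHAP library approximates them. The counterfactual search varies a single feature, whereas practical methods usually search over several.

```python
from itertools import permutations


def score(x):
    """Hypothetical loan score; the bonus term makes the features interact."""
    s = 0.5 * x["income_k"] - 5.0 * x["late_payments"]
    if x["income_k"] > 50 and x["late_payments"] == 0:
        s += 10.0  # bonus for high income combined with a clean payment history
    return s


def shapley_values(model, instance, baseline):
    """Exact Shapley values: average marginal contribution over all feature orderings."""
    names = list(instance)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)  # start from the baseline input
        previous = model(current)
        for name in order:
            current[name] = instance[name]  # switch one feature to its actual value
            updated = model(current)
            totals[name] += updated - previous
            previous = updated
    return {name: totals[name] / len(orderings) for name in names}


def income_counterfactual(model, instance, threshold, max_increase=100):
    """Smallest whole-unit income increase that reaches the threshold, or None."""
    for extra in range(max_increase + 1):
        candidate = dict(instance, income_k=instance["income_k"] + extra)
        if model(candidate) >= threshold:
            return extra
    return None


baseline = {"income_k": 40, "late_payments": 0}   # assumed reference applicant
applicant = {"income_k": 60, "late_payments": 1}  # assumed applicant to explain

phi = shapley_values(score, applicant, baseline)
needed = income_counterfactual(score, applicant, threshold=30.0)
print("Shapley values:", phi)
print("Income increase needed (thousands):", needed)
```

The attributions sum to the difference between the applicant's score and the baseline score, which is the property that makes Shapley values additive. The counterfactual result reads directly as a statement of the form "if your income were this much higher, the outcome would change."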

Ensuring Transparency in AI Models

Beyond explainability, transparency involves making the entire AI lifecycle—data, algorithms, and decision processes—open and understandable.

1. Transparent Data Practices

- Data Documentation: Clearly documenting data sources, preprocessing steps, and potential biases ensures that stakeholders understand the foundation of the AI system.

- Bias Auditing: Regular audits to identify and mitigate biases in the training data are crucial for building equitable AI systems.
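
As a rough illustration of what a bias audit might compute, the sketch below compares approval rates across groups in a labeled dataset. The records, the `group` and `approved` field names, and the choice of metric are assumptions made for this example; a real audit would use several fairness metrics and far more data.

```python
from collections import defaultdict


def selection_rates(records, group_key="group", outcome_key="approved"):
    """Share of positive outcomes per group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for record in records:
        totals[record[group_key]] += 1
        if record[outcome_key]:
            positives[record[group_key]] += 1
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Difference between the highest and lowest group selection rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical training labels, for illustration only.
records = [
    {"group": "A", "approved": True},
    {"group": "A", "approved": True},
    {"group": "A", "approved": True},
    {"group": "A", "approved": False},
    {"group": "B", "approved": True},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
]

rates = selection_rates(records)
print("Selection rates:", rates)
print("Parity gap:", parity_gap(rates))
```

A large gap does not prove the data is unfair on its own, but it flags where a closer look at how the labels were produced is warranted.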

2. Transparent Algorithm Design

- Open-Source Algorithms: Sharing algorithmic details or even source code allows external experts to review and validate the model.

- White-Box Models: Unlike black-box models, white-box models such as small decision trees or linear models expose their internal logic, so each prediction can be traced to specific rules or weights.

3. Transparent Decision Processes

- Audit Trails: Maintaining a record of how decisions were made allows for accountability and easier troubleshooting.

- User-Friendly Interfaces: Providing clear, jargon-free explanations of decisions in user-facing systems builds confidence and understanding.
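
The audit trail idea can be sketched as an append-only log in which each decision record stores its inputs, output, and explanation. The field names and the hash chaining are assumptions for this example, added so that later edits to a record can be detected; timestamps are left out to keep the sketch deterministic.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash for the first record in the chain


def _digest(body):
    """SHA-256 over a canonical JSON encoding of the record body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_record(trail, inputs, output, explanation, model_version):
    """Append one decision record, linked to the previous record by its hash."""
    body = {
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
        "explanation": explanation,
        "previous_hash": trail[-1]["hash"] if trail else GENESIS_HASH,
    }
    record = {**body, "hash": _digest(body)}
    trail.append(record)
    return record


def verify(trail):
    """Return True if no record has been altered or reordered."""
    previous_hash = GENESIS_HASH
    for record in trail:
        body = {key: value for key, value in record.items() if key != "hash"}
        if body["previous_hash"] != previous_hash or _digest(body) != record["hash"]:
            return False
        previous_hash = record["hash"]
    return True


# Hypothetical decisions, for illustration only.
trail = []
append_record(trail, {"income_k": 60, "late_payments": 1}, "denied",
              "Score below approval threshold", model_version="demo-1")
append_record(trail, {"income_k": 70, "late_payments": 1}, "approved",
              "Score met approval threshold", model_version="demo-1")
print("Trail intact:", verify(trail))
```

Storing the explanation next to the decision means the same record can serve both the engineer troubleshooting a failure and the plain-language message shown to the user.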

Case Studies: Explainable and Transparent AI in Action

1. Healthcare

IBM Watson for Oncology was designed to present supporting evidence alongside its treatment recommendations, so that doctors could evaluate the reasoning behind each suggestion rather than accept it on faith.

2. Financial Services

FICO, a credit scoring company, has developed explainable AI models that provide detailed explanations for credit scores, helping consumers understand and improve their financial standing.

3. Autonomous Vehicles

Tesla and Waymo are incorporating explainability into their AI systems to help engineers and regulators understand how decisions are made in critical driving scenarios.

The Path Forward: Best Practices for Explainable and Transparent AI

1. Adopt a Human-Centric Approach

Design AI systems with the end-user in mind. Tailor explanations to the audience, ensuring they are understandable and actionable.

2. Integrate Explainability Early

Incorporate explainability into the AI development lifecycle from the outset, rather than treating it as an afterthought.

3. Collaborate Across Disciplines

Work with ethicists, sociologists, and domain experts to address the broader implications of AI decisions and ensure transparency.

4. Leverage Emerging Standards

Adopt emerging frameworks and guidelines, such as those from the European Commission or the IEEE, to ensure consistent and ethical practices.

5. Foster Continuous Learning

Explainable AI is an evolving field. Stay updated on new techniques, tools, and best practices to continually improve transparency.

Conclusion

Developing explainable and transparent AI models is not just a technical challenge but an ethical imperative. As AI becomes increasingly integrated into our lives, ensuring that its decisions are understandable and fair is crucial for building trust and accountability. By leveraging advanced techniques and fostering collaboration across disciplines, we can create AI systems that are not only powerful but also transparent, equitable, and aligned with human values.